|

openwallpaper

|


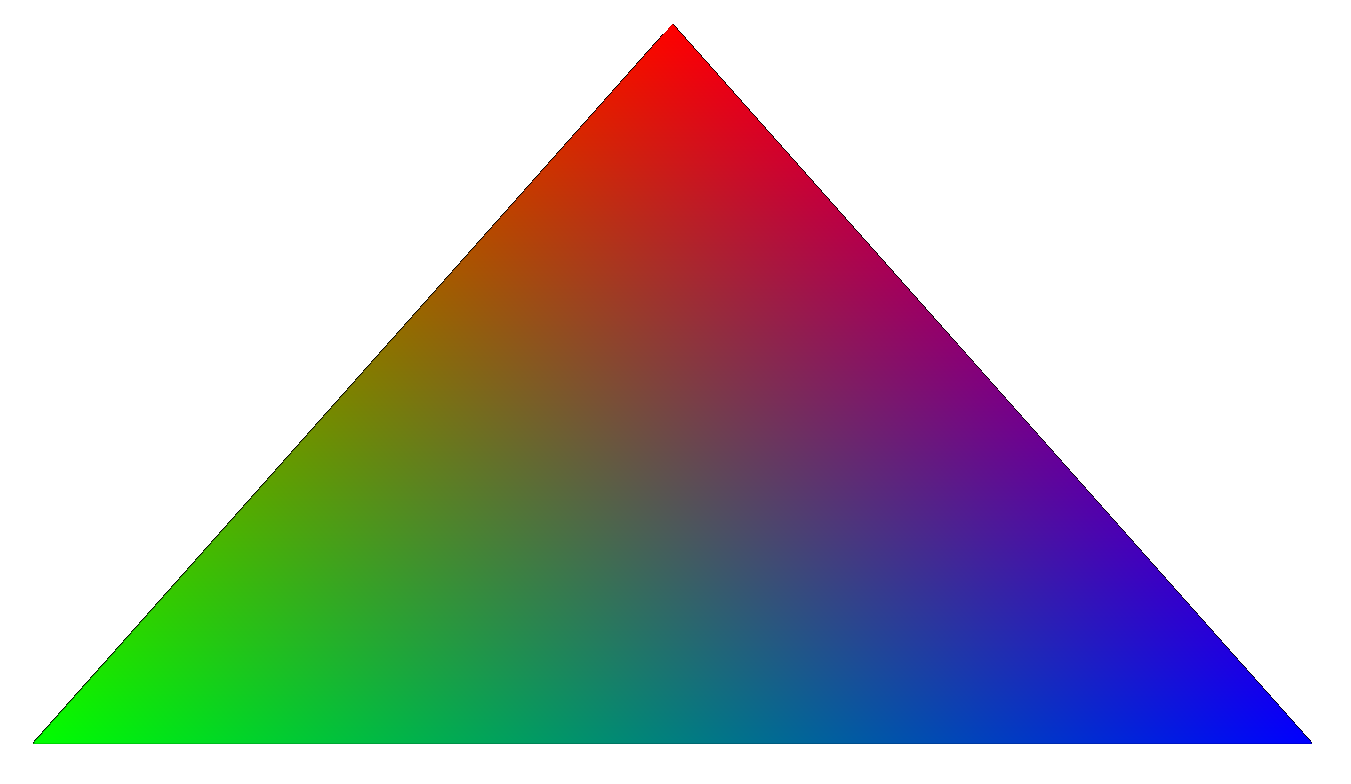

Drawing a triangle is the "Hello, world" of graphics programming. It may seem overcomplicated if you haven't worked with graphics APIs before. If so, think about why it is designed this way and why it could not be simpler, and it will become much easier to understand (this approach works for any other programming concept too).

First, let's describe the vertex data that we will send to the GPU. A triangle has 3 vertices; for each of them we store a position in 2D space (x, y: float) and an RGB color (r, g, b: float). The GPU works in normalized device coordinates (NDC), where the bottom-left corner is (-1, -1) and the top-right corner is (1, 1). We will use the same coordinate system in our data.
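The layout described above could look roughly like this in C. This is only a sketch: the names `vertex_t`, `triangle_vertices` and `triangle_vertices_size` are hypothetical, and the positions and colors are arbitrary values picked for illustration.

```c
#include <stddef.h>

/* One vertex: a 2D position in NDC followed by an RGB color (5 floats). */
typedef struct {
    float x, y;    /* position, each component in [-1, 1] */
    float r, g, b; /* color, each component in [0, 1] */
} vertex_t;

/* Illustrative triangle; the actual values are an assumption. */
static const vertex_t triangle_vertices[3] = {
    { -0.5f, -0.5f,  1.0f, 0.0f, 0.0f }, /* bottom left, red */
    {  0.5f, -0.5f,  0.0f, 1.0f, 0.0f }, /* bottom right, green */
    {  0.0f,  0.5f,  0.0f, 0.0f, 1.0f }, /* top, blue */
};

/* Number of bytes that would have to be copied into a vertex buffer. */
static const size_t triangle_vertices_size = sizeof(triangle_vertices);
```

Keeping position and color interleaved in one struct means a single buffer holds everything the vertex shader needs for each vertex.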

Once we have the vertex data, we need to upload it to the GPU so we can use it for drawing. To do this, we create a vertex buffer, which is a chunk of GPU memory, and copy our data into it. Copying is only allowed inside a copy pass, which is an abstraction over GPU batching: all copy calls inside one pass are sent to the GPU at once.

Next, we need to create a vertex shader and a fragment shader. Shaders are small programs that run on the GPU over many data elements in parallel. They are written in GLSL and compiled into platform-independent SPIR-V bytecode, which you can use with OpenWallpaper.

The vertex shader transforms vertex data from the buffer into the final vertex position on the screen. For example, it can transform world-space coordinates into screen space, so you don't have to update all the vertex data on the CPU when the camera moves. In our case, we just pass the coordinates and color through.
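To make the world-space example more concrete, here is a CPU-side C sketch of the kind of per-vertex math a vertex shader might perform. It is not shader code and not part of the OpenWallpaper API: `vec2_t`, `camera_t` and `world_to_ndc` are hypothetical names, and the camera model (a center plus half-extents of the visible area) is an assumption.

```c
/* 2D point; hypothetical helper type. */
typedef struct { float x, y; } vec2_t;

/* Hypothetical camera: world-space center and half-extents of the visible area. */
typedef struct {
    vec2_t center;
    vec2_t half_size;
} camera_t;

/* Maps a world-space position to NDC, where the visible area spans [-1, 1]. */
static vec2_t world_to_ndc(vec2_t world, camera_t cam) {
    vec2_t ndc;
    ndc.x = (world.x - cam.center.x) / cam.half_size.x;
    ndc.y = (world.y - cam.center.y) / cam.half_size.y;
    return ndc;
}
```

When the camera moves, only `cam` changes; the stored vertex positions stay the same, which is the benefit described above.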

vertex.glsl:

After the vertex shader has run for each vertex, its output vertices form triangles. These triangles are rasterized, i.e. converted into a set of pixels on the screen, and a fragment shader is run for each of these pixels. The fragment shader calculates and returns the final color of a pixel.
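Rasterization happens in GPU hardware, but the idea can be sketched on the CPU. The C code below tests each pixel center of a small grid against the three edges of a counter-clockwise triangle. It is a simplified illustration, not how any particular GPU works, and all names (`edge`, `covers`, `count_covered_pixels`) are hypothetical.

```c
/* Edge function: positive when point p is to the left of the edge a -> b. */
static float edge(float ax, float ay, float bx, float by, float px, float py) {
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax);
}

/* Returns 1 if NDC point (px, py) lies inside the counter-clockwise triangle a, b, c. */
static int covers(const float a[2], const float b[2], const float c[2],
                  float px, float py) {
    return edge(a[0], a[1], b[0], b[1], px, py) >= 0.0f
        && edge(b[0], b[1], c[0], c[1], px, py) >= 0.0f
        && edge(c[0], c[1], a[0], a[1], px, py) >= 0.0f;
}

/* Counts the pixels of a width x height grid whose centers fall inside the triangle. */
static int count_covered_pixels(const float a[2], const float b[2], const float c[2],
                                int width, int height) {
    int count = 0;
    for (int row = 0; row < height; row++) {
        for (int col = 0; col < width; col++) {
            /* Map the pixel center from grid coordinates to NDC in [-1, 1]. */
            float px = (col + 0.5f) / width * 2.0f - 1.0f;
            float py = (row + 0.5f) / height * 2.0f - 1.0f;
            count += covers(a, b, c, px, py);
        }
    }
    return count;
}
```

Every pixel for which `covers` returns 1 corresponds to one fragment shader invocation in the real pipeline.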

Additional vertex shader outputs (in our case, v_color) are passed to the fragment shader as inputs. But the vertex shader runs once per vertex, while the fragment shader runs once per pixel. So the value the fragment shader sees as its input is linearly interpolated from the values at the 3 vertices forming the triangle: the closer the pixel is to a vertex, the higher the weight of that vertex's value. This interpolation gives us a nice color gradient across the triangle.
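The interpolation can be illustrated with barycentric weights. The following C sketch computes, for a point inside a triangle, the weight of each vertex and blends the vertex colors accordingly. It is a CPU-side approximation of what the GPU does for you; the names `vertex_t`, `color_t` and `interpolate_color` are hypothetical, and perspective correction is ignored because our triangle is purely 2D.

```c
typedef struct { float x, y; float r, g, b; } vertex_t;
typedef struct { float r, g, b; } color_t;

/* Twice the signed area of the triangle (a, b, p). */
static float edge(float ax, float ay, float bx, float by, float px, float py) {
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax);
}

/* Blends the colors of a, b, c using the barycentric weights of point (px, py). */
static color_t interpolate_color(vertex_t a, vertex_t b, vertex_t c,
                                 float px, float py) {
    float area = edge(a.x, a.y, b.x, b.y, c.x, c.y);
    /* Each weight is the relative area of the sub-triangle opposite the vertex. */
    float wa = edge(b.x, b.y, c.x, c.y, px, py) / area;
    float wb = edge(c.x, c.y, a.x, a.y, px, py) / area;
    float wc = 1.0f - wa - wb;

    color_t out;
    out.r = wa * a.r + wb * b.r + wc * c.r;
    out.g = wa * a.g + wb * b.g + wc * c.g;
    out.b = wa * a.b + wb * b.b + wc * c.b;
    return out;
}
```

At a vertex, that vertex's weight is 1 and the other two are 0, so the result is exactly the vertex color; anywhere else the result is a mix, which produces the gradient.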

fragment.glsl:

To compile shaders into SPIR-V bytecode, install glslc and run:

Put the resulting vertex.spv and fragment.spv files into the scene.owf archive so you can load them from the scene module. Load them with the OpenWallpaper API:

You also need to create a pipeline object, which puts together all the draw call information.

Here we:

We have initialized everything we need in init(), and now we're ready to issue the draw call repeatedly in update(). To do this, we call ow_render_geometry, binding the pipeline object and the vertex buffer, and saying that we want to draw 3 vertices starting from vertex 0 in 1 instance (instancing is described later). We do this in a render pass, which is a set of draw calls sent to the GPU as a batch. At the beginning of the render pass, we also clear the screen to black.

You can find the final code in the triangle example.